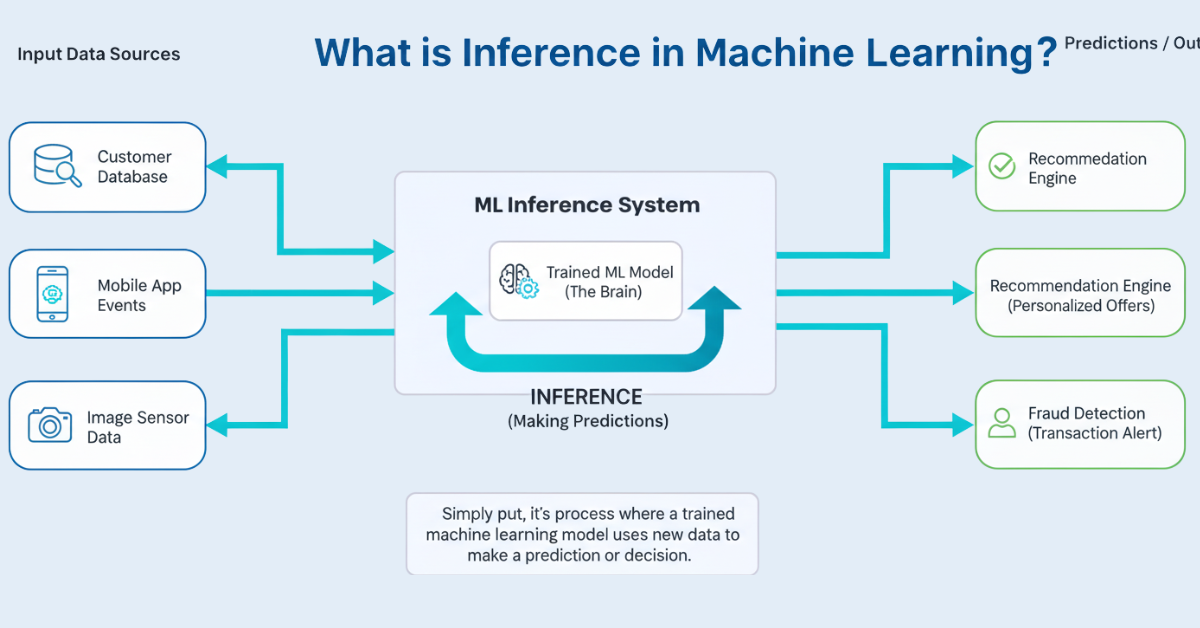

Machine learning powers many everyday tools, including email spam filters, recommendation engines, and voice assistants. They all rely on one crucial operation: inference. So what actually happens when these tools make decisions after they have been trained?

Inference is the stage at which an already trained machine learning model takes in new data and makes a prediction or decision based on the patterns it learned during training. Think of the model as a student who has studied for an exam: the learning phase is over, and it is now time to apply that knowledge to questions it has not seen before.

This mechanism drives many real-world applications. Whether the task is flagging fraudulent transactions at a bank or supporting a medical diagnosis, inference turns raw data into useful results. Understanding how inference works helps explain why some AI systems respond quickly while others are slower, and why accuracy matters so much once a model is live.

Understanding Inference

Inference happens after a machine learning model has been trained. During training, the model analyzes many examples to learn the underlying patterns, relationships, and rules in the data. Once training is over, its parameters are fixed and no longer change.

Once the trained model is deployed, the inference phase begins. In this phase, new, previously unseen data is fed into the model, which applies what it learned during training to produce predictions. For instance, a model trained to tell dogs from cats will assign a newly submitted photo to one of those two categories.
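As a minimal sketch of this idea, the snippet below treats inference as a pure function of fixed parameters and a new input. The weights, the bias, and the two image features are made-up values chosen for illustration; a real image classifier would have far more parameters and would learn them from data.

```python
import math

# Hypothetical parameters standing in for values learned during training.
# At inference time they are only read, never updated.
WEIGHTS = (1.8, -2.4)
BIAS = 0.3

def predict_dog_probability(features):
    """Return the model's probability that the photo shows a dog."""
    score = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-score))  # logistic (sigmoid) function

def classify(features):
    """Map the probability to one of the two labels."""
    return "dog" if predict_dog_probability(features) >= 0.5 else "cat"

# A new, unseen input described by two made-up numeric features.
new_photo = (0.9, 0.2)
label = classify(new_photo)
print(label)
```

Calling `classify` any number of times leaves `WEIGHTS` and `BIAS` untouched, which is the property that separates inference from training.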

Speed and purpose are the main differences between the training and inference phases. During training, the model can take its time to learn and improve, whereas inference usually has to return answers quickly. For example, recommendation systems must generate product suggestions in well under a second, and medical imaging tools have to deliver diagnostic support promptly so that patient care is not delayed.

Inference mainly comes in two modes. Batch inference processes a large volume of data in one run, where the latency of any single prediction matters little, while real-time inference returns an answer immediately for each individual request. For instance, a bank might screen thousands of transactions for fraud in a nightly batch job, whereas a search engine uses real-time inference to rank results as soon as a query is submitted.
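The two modes can be sketched as two entry points around the same model. Here `model` is a hypothetical stand-in (a fixed linear rule), and the function names are illustrative rather than taken from any particular serving framework.

```python
def model(x):
    """Hypothetical stand-in for a trained model: a fixed linear rule."""
    return 2.0 * x + 1.0

def predict_realtime(x):
    """Real-time mode: one request in, one answer out, right away."""
    return model(x)

def predict_batch(inputs):
    """Batch mode: score a whole collection in one pass, e.g. as a scheduled job."""
    return [model(x) for x in inputs]

single_answer = predict_realtime(3.0)
batch_answers = predict_batch([0.0, 1.0, 2.0])
print(single_answer, batch_answers)
```

The model is identical in both cases. What differs is how requests arrive and how quickly each answer is needed.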

Every deployed model depends on inference to deliver value. Without it, the hours of training and the computing resources spent produce nothing useful for end users.

Comparison of Training and Inference

It’s essential to know how training differs from inference, because the two have different requirements and constraints.

During Training:

- The model learns from (typically labeled) data

- The parameters are updated continually

- Speed matters less than accuracy

- Large quantities of data are needed

- Plenty of computing power is usually available

- The process can take hours, days, or weeks

During Inference:

- The model applies what it has learned to new data

- The parameters remain unchanged

- Speed and efficiency are paramount

- Often only a single data point is processed at a time

- Computing resources may be limited

- Answers need to come back within seconds or milliseconds

Take facial recognition. During training, the model is shown many images of faces so that it can learn to tell different people apart, which takes a great deal of time and computing power. Inference, by contrast, happens every time a camera captures someone’s face, and recognizing the person on the spot requires the model to respond quickly.
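The contrast between changing and fixed parameters can be sketched with a deliberately tiny model. The example below assumes a one-feature linear model fitted by plain gradient descent. The data, learning rate, and epoch count are illustrative choices, not a recipe for real systems.

```python
def train(examples, learning_rate=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent; w and b change on every step."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def infer(params, x):
    """Apply the trained parameters to a new input; nothing is updated here."""
    w, b = params
    return w * x + b

# Labeled training data generated from y = 2x + 1.
labeled = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
params = train(labeled)          # slow, iterative, done once
prediction = infer(params, 4.0)  # fast, a single multiply and add
print(round(prediction, 3))
```

`train` loops over the whole dataset many times, while `infer` does a constant amount of work per request, which mirrors the two lists above.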

The metrics that matter differ too. During training, accuracy and loss are tracked. During inference, latency (measured in milliseconds per response) becomes critical, and throughput (the number of predictions the system makes per second) should be monitored as well.
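A rough way to measure both inference metrics is sketched below, using only the standard library. `model` is a hypothetical stand-in for a real forward pass, so the absolute numbers it produces say nothing about real systems. Only the measurement pattern is the point.

```python
import time

def model(x):
    """Hypothetical stand-in for a trained model's forward pass."""
    return 2.0 * x + 1.0

def measure(predict, inputs):
    """Return (mean latency in ms per prediction, throughput in predictions/second)."""
    latencies_ms = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start
    mean_latency_ms = sum(latencies_ms) / len(latencies_ms)
    throughput = len(inputs) / elapsed
    return mean_latency_ms, throughput

mean_latency_ms, throughput = measure(model, [float(i) for i in range(1000)])
print(f"{mean_latency_ms:.6f} ms per prediction, {throughput:.0f} predictions/s")
```

In practice, tail latency (for example the 95th or 99th percentile) is often tracked alongside the mean, because a few slow responses can matter more than the average.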

These distinctions are vital when building systems. Powerful servers may be used for training, while the model may be deployed for inference on devices as constrained as mobile phones. Training can prioritize maximum accuracy, whereas inference often puts low latency first, and designing with both in mind leads to systems that work better in the real world.

How Inference Works in Real Applications

Inference is not just theory. It powers our everyday systems. Let’s see how it works.

E-commerce and Recommendations

When you visit an online store, inference is what suggests products to you. The model considers what you bought and viewed before, and what similar shoppers bought. It quickly sorts through thousands of options and displays only the most relevant ones. Each time the page reloads, inference runs again.
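The final "keep only the most relevant" step can be sketched as a top-k selection over predicted scores. The product names and relevance scores below are made up. In a real system the scores would come from a trained recommendation model.

```python
import heapq

def recommend(scores, k=3):
    """Return the k product IDs with the highest predicted relevance."""
    return heapq.nlargest(k, scores, key=scores.get)

# Hypothetical per-product relevance scores for one shopper.
predicted_relevance = {
    "kettle": 0.12,
    "headphones": 0.91,
    "novel": 0.47,
    "charger": 0.78,
    "socks": 0.05,
}
top = recommend(predicted_relevance, k=3)
print(top)
```

`heapq.nlargest` avoids sorting the whole catalog when only a handful of items will be shown.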

Healthcare and Diagnostics

Radiologists use AI to analyze X-rays and CT scans. The model identifies areas of concern in the images. It does not merely say normal or abnormal: it highlights the regions most likely to be abnormal, giving doctors the detail they need to make better decisions. In this way, inference supports diagnosis.

Financial Fraud Detection

Banks run inference on every transaction. The model checks whether a transaction fits the typical spending pattern for that user. For example, if someone usually buys groceries but suddenly tries to book a flight abroad, this looks suspicious. Inference flags such cases for further review quietly, in the background.
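One simple way to express "does this fit the user's typical pattern" is a z-score check against past amounts, sketched below. This is a statistical stand-in chosen for illustration, not how any particular bank does it. Production systems typically use trained models over many more signals than the amount alone. The history values and threshold are made up.

```python
from statistics import mean, stdev

def is_suspicious(history, amount, threshold=3.0):
    """Flag an amount that lies more than `threshold` standard deviations from the user's mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts were identical, so any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold

# Hypothetical past grocery purchases for one user.
grocery_history = [42.0, 38.5, 51.0, 45.2, 40.3]
flag_flight = is_suspicious(grocery_history, 870.0)   # large, unusual purchase
flag_grocery = is_suspicious(grocery_history, 47.0)   # in line with past spending
print(flag_flight, flag_grocery)
```

A flagged transaction would then be routed to further review rather than blocked outright, matching the flow described above.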

Natural Language Processing

A voice assistant uses inference to interpret what you say. This involves several steps: recognizing speech, understanding the language, and generating a response. Inference runs all of these within seconds.

Inference is what makes trained models effective and practical for solving real problems. A trained model on its own delivers nothing until inference is run.

Types of Inference Models

Different types of model suit different applications, so it pays to know them.

1. Classification Models

They sort data points into categories. Email systems classify messages as spam or not spam. Medical systems classify tumors as benign or malignant. When given new data, classification inference produces a label along with a confidence score.
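A common way to get a label plus a confidence score is to pass the model's raw class scores through a softmax and take the largest probability. The sketch below assumes the raw scores (`logits`) have already been computed by some trained model. The numbers are made up.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits, labels):
    """Return the most likely label and its probability."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical raw scores for one incoming email.
label, confidence = classify([2.0, 0.5], ["spam", "not spam"])
print(label, round(confidence, 3))
```

The confidence lets a downstream system treat borderline cases differently, for example by sending low-confidence emails to a review folder instead of deleting them.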

2. Regression Models

They predict continuous numeric values, such as house prices, stock prices, or upcoming temperatures. Regression inference outputs a number rather than a label.

3. Object Detection Models

They locate objects within an image or a video. Self-driving cars use them to identify pedestrians and other cars, while security cameras use them to detect people and suspicious items. Object detection reveals not only which objects are present but also where they are located.
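The "what and where" output can be pictured as a list of records, each carrying a label, a confidence score, and a bounding box. The sketch below assumes that shape and shows a typical post-processing step of dropping low-confidence detections. The `Detection` type, the box convention, and the values are illustrative and not tied to any specific detection library.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str    # what the object is
    score: float  # model confidence between 0 and 1
    box: tuple    # where it is: (x_min, y_min, x_max, y_max) in pixels

def keep_confident(detections, min_score=0.5):
    """Drop detections whose confidence is below the threshold."""
    return [d for d in detections if d.score >= min_score]

# Hypothetical raw model output for one video frame.
raw = [
    Detection("pedestrian", 0.93, (120, 40, 180, 210)),
    Detection("car", 0.88, (300, 90, 520, 230)),
    Detection("car", 0.21, (10, 10, 30, 25)),
]
kept = keep_confident(raw)
print([(d.label, d.box) for d in kept])
```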

4. Time Series Models

These analyze patterns over time to predict future values. Examples include demand forecasting, power consumption forecasting, and traffic congestion prediction. Time series inference uses historical records to project ahead.
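To make "uses historical records to project ahead" concrete, here is a naive baseline that forecasts the next value as the mean of the most recent observations. It is a deliberately simple stand-in rather than a trained forecasting model. The demand figures and window size are made up.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if len(history) < window:
        raise ValueError("not enough history for the chosen window")
    recent = history[-window:]
    return sum(recent) / window

# Hypothetical daily demand for one product.
daily_demand = [100, 104, 98, 110, 105, 109]
next_day = moving_average_forecast(daily_demand)
print(next_day)
```

Real time series models also account for trend and seasonality, but the inference step has the same shape: past values in, a projected value out.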

5. Natural Language Models

They handle text-processing tasks such as translation, chat, and summarization. Each inference call either interprets existing text or generates new text.

Which type of model to choose depends on the question being asked and on what kind of output makes sense for the problem at hand. Each type comes with its own considerations and characteristics at inference time.

Final Thoughts

Inference bridges the gap between theory and practice for trained ML models, meeting real-world needs through speed, accuracy, and scalability. You have now seen what happens after training, how inference differs from training, and where it shows up in real applications.

Inference is not only about making predictions but about making them quickly, reliably, and at scale. Applications such as recommendation systems, fraud detection, and medical diagnostics all get their value from inference. Understanding how it works helps you better appreciate how AI operates and what it takes to run it in production.